Normalized Mutual Information to evaluate overlapping.

Normalized mutual information based registration using k-means clustering and shading.

In this paper, we use normalized mutual information (NMI) (Collignon, 1998; Collignon et al., 1995; Studholme et al., 1999; Viola, 1995; Viola and Wells III, 1995; Wells et al., 1995) as a measure for rigid 3D registration of clinical and artificial images. For a number of modalities, NMI has proven to be a …
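In registration, NMI is computed from the intensities of the two images over their overlapping region. The sketch below assumes the definition of Studholme et al. (1999), NMI = (H(A) + H(B)) / H(A, B), estimated from a joint intensity histogram. The bin count of 32, the function names `entropy` and `nmi_registration`, and the synthetic test images are illustrative assumptions. The paper's own estimator, including its k-means intensity clustering, is not reproduced here.

```python
import numpy as np

def entropy(p):
    """Shannon entropy (nats) of a probability array, ignoring empty bins."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

def nmi_registration(img_a, img_b, bins=32):
    """Studholme-style NMI = (H(A) + H(B)) / H(A, B) from a joint histogram.

    Assumes img_a and img_b are already resampled onto the same grid,
    i.e. they cover the overlap region for the current transform.
    """
    joint, _, _ = np.histogram2d(img_a.ravel(), img_b.ravel(), bins=bins)
    p_ab = joint / joint.sum()   # joint intensity distribution
    p_a = p_ab.sum(axis=1)       # marginal distribution of image A
    p_b = p_ab.sum(axis=0)       # marginal distribution of image B
    h_ab = entropy(p_ab)
    if h_ab == 0.0:
        raise ValueError("joint entropy is zero (constant images)")
    return (entropy(p_a) + entropy(p_b)) / h_ab

rng = np.random.default_rng(0)
fixed = rng.random((64, 64))
aligned = fixed.copy()                                          # perfect alignment
shuffled = rng.permutation(fixed.ravel()).reshape(fixed.shape)  # no spatial correspondence
print(nmi_registration(fixed, aligned))   # ≈ 2.0, the maximum of this measure
print(nmi_registration(fixed, shuffled))  # close to 1.0
```

Under this definition, NMI ranges from 1 (independent intensities) to 2 (one image's intensities fully determine the other's). A registration loop would maximize it over the rigid transform parameters.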

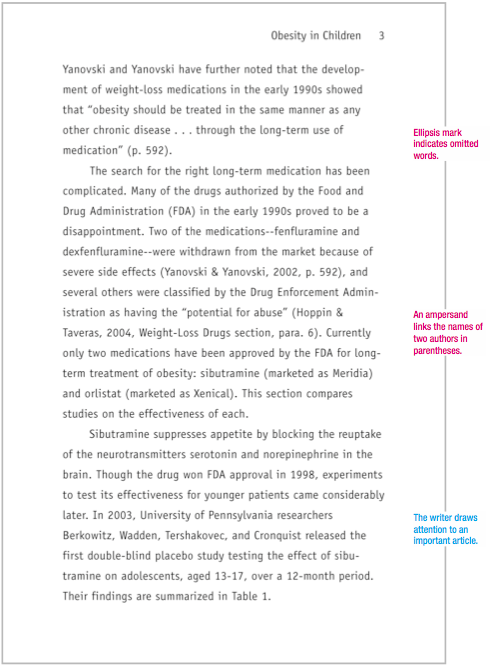

The normalized mutual information (NMI) calculation is described in the deflation-PIC paper, and the applicable formula is copied to the screenshot shown below. My question is specifically about the double summation in the numerator: why is it a double summation? My (apparently incorrect) intuition would be that the numerator would contain all of the …
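The double summation arises because the mutual information between two clusterings A and B is a sum over every cell of their contingency table. The outer sum runs over the clusters i of A, the inner sum runs over the clusters j of B, and each term compares the overlap n_ij with the overlap n_i n_j / n that independent assignments would produce: I(A;B) = Σ_i Σ_j (n_ij / n) log(n n_ij / (n_i n_j)). The entropies in the denominator need only single sums because each involves one clustering. The Python sketch below assumes the common geometric-mean normalization, I(A;B) / sqrt(H(A) H(B)), which may differ from the exact formula in the screenshot. The function name `nmi` is hypothetical.

```python
from collections import Counter
from math import log, sqrt

def nmi(labels_a, labels_b):
    """NMI of two flat clusterings, normalized by sqrt(H(A) * H(B))."""
    n = len(labels_a)
    size_a = Counter(labels_a)                  # n_i: size of cluster i in A
    size_b = Counter(labels_b)                  # n_j: size of cluster j in B
    overlap = Counter(zip(labels_a, labels_b))  # n_ij: items in both i and j

    # Numerator: the double sum visits every (i, j) cell of the contingency table.
    mi = 0.0
    for i, n_i in size_a.items():
        for j, n_j in size_b.items():
            n_ij = overlap[(i, j)]
            if n_ij > 0:  # empty cells contribute 0, since 0 * log 0 is taken as 0
                mi += (n_ij / n) * log(n * n_ij / (n_i * n_j))

    # Denominator: the entropy of each clustering on its own (single sums).
    h_a = -sum((c / n) * log(c / n) for c in size_a.values())
    h_b = -sum((c / n) * log(c / n) for c in size_b.values())
    if h_a == 0.0 or h_b == 0.0:
        return 1.0 if h_a == h_b else 0.0  # convention for single-cluster inputs
    return mi / sqrt(h_a * h_b)

print(nmi([0, 0, 1, 1], [1, 1, 0, 0]))  # 1.0 up to rounding: same grouping, labels swapped
print(nmi([0, 0, 1, 1], [0, 1, 0, 1]))  # 0.0: the groupings are independent
```

Because each term needs both n_i and n_j, a single sum over the items or over one clustering's clusters cannot express how the two clusterings overlap.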

In this paper, we propose a clustering ensemble framework based on a new cluster-validation criterion named Modified Normalized Mutual Information (MNMI). In the framework, a large number of clusters are first generated, and then a subset of them is selected for the final ensemble. The clusters that satisfy a threshold on the proposed metric are selected to participate in the final clustering.
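The threshold-based selection step can be sketched as follows. The MNMI formula is not given here, so plain NMI (scikit-learn's `normalized_mutual_info_score`) stands in for it. As a further simplification, the sketch scores whole candidate clusterings rather than individual clusters, by their average NMI against the other candidates. The function name `select_members`, the threshold of 0.4, and the toy candidates are all hypothetical.

```python
from itertools import combinations

import numpy as np
from sklearn.metrics import normalized_mutual_info_score

def select_members(candidates, threshold=0.5):
    """Keep candidate clusterings whose mean NMI to the others meets a threshold.

    Plain NMI is a stand-in for MNMI; each candidate is a full label vector.
    """
    m = len(candidates)
    agreement = np.zeros((m, m))
    for i, j in combinations(range(m), 2):
        s = normalized_mutual_info_score(candidates[i], candidates[j])
        agreement[i, j] = agreement[j, i] = s
    avg = agreement.sum(axis=1) / (m - 1)  # mean agreement with the other candidates
    selected = [i for i in range(m) if avg[i] >= threshold]
    return selected, avg

candidates = [
    [0, 0, 1, 1, 2, 2],
    [1, 1, 0, 0, 2, 2],  # same grouping as the first, relabeled
    [0, 0, 1, 1, 1, 1],  # a coarser version of the first
    [0, 1, 0, 1, 0, 1],  # unrelated to all the others
]
selected, avg = select_members(candidates, threshold=0.4)
print(selected, np.round(avg, 3))
```

The unrelated fourth candidate has zero agreement with the rest and is dropped. Selection by consensus like this favors mutually consistent members; whether MNMI also rewards diversity is not stated in the text above.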

A normalized measure is desirable in many contexts, for example one that assigns a value of 0 where the two sets are totally dissimilar and 1 where they are identical. A measure based on normalized mutual information [1] has recently become popular. We demonstrate unintuitive behaviour of this measure and show how it can be corrected by using a more conventional normalization. We compare the …
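Why the normalization matters can be seen even for ordinary, non-overlapping partitions. The sketch below divides the same mutual information by four common denominators. It does not reproduce the overlapping-cover measure discussed above, and it does not claim which normalization that work adopts. The function names are hypothetical, and the two label vectors are a made-up example in which one partition refines the other.

```python
from collections import Counter
from math import log, sqrt

def entropy(labels):
    """Entropy (nats) of the partition given by a label vector."""
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())

def mutual_info(a, b):
    """Plug-in mutual information (nats) between two label vectors."""
    n = len(a)
    ca, cb, joint = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((k / n) * log(n * k / (ca[i] * cb[j])) for (i, j), k in joint.items())

def normalized_variants(a, b):
    """NMI of two partitions under several common normalizations."""
    i, ha, hb = mutual_info(a, b), entropy(a), entropy(b)
    return {
        "min": i / min(ha, hb),
        "sqrt": i / sqrt(ha * hb),
        "arithmetic": 2 * i / (ha + hb),
        "max": i / max(ha, hb),
    }

a = [0, 0, 0, 0, 1, 1, 1, 1]  # two groups of four
b = [0, 1, 2, 3, 4, 5, 6, 7]  # every element in its own group
for name, value in normalized_variants(a, b).items():
    print(f"{name:>10}: {value:.3f}")
```

Here I(A;B) = H(A) = log 2 and H(B) = log 8, so the scores are 1.000 (min), 0.577 (sqrt), 0.500 (arithmetic), and 0.333 (max). Dividing by the smaller entropy gives the "identical" score of 1 whenever one partition refines the other, even though the partitions clearly differ.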

How can I compare the following mutual information values? I'm wondering what the most appropriate way is to display them in my report table. I'm computing them with this formula, where e and c are clusters and the intersection is the number of elements they have in common. For each pair of e and c, I have an I value (mutual information). Successively …
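The formula itself is missing from the question, so the following is a minimal sketch that assumes the per-pair value is the standard term of the mutual-information sum, I(e, c) = (|e ∩ c| / N) log(N |e ∩ c| / (|e| |c|)). The clusters are represented as Python sets of element ids. The names `pairwise_mi_terms`, `truth`, and `found` and the toy data are hypothetical.

```python
from math import log

def pairwise_mi_terms(clusters_e, clusters_c, n):
    """Per-pair contributions to the mutual information between two clusterings.

    clusters_e, clusters_c: dicts mapping a cluster name to a set of element ids.
    n: total number of elements. Pairs with an empty intersection contribute 0.
    """
    table = {}
    for name_e, e in clusters_e.items():
        for name_c, c in clusters_c.items():
            k = len(e & c)  # number of elements in common
            table[(name_e, name_c)] = (k / n) * log(n * k / (len(e) * len(c))) if k else 0.0
    return table

truth = {"e1": {1, 2, 3, 4}, "e2": {5, 6, 7, 8}}
found = {"c1": {1, 2, 3}, "c2": {4, 5, 6, 7, 8}}
terms = pairwise_mi_terms(truth, found, n=8)
for (e, c), value in terms.items():
    print(f"{e} x {c}: {value:+.4f}")
print("total I =", round(sum(terms.values()), 4))
```

The e1 × c2 term is negative, because those two clusters share fewer elements than independence would predict. Individual terms are therefore not similarity scores in their own right. For a report table, showing the summed I, or a normalized version of it, is usually easier to interpret than the raw per-pair values.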

Cluster analysis, or clustering, is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense) to each other than to those in other groups (clusters). It is a main task of exploratory data mining and a common technique for statistical data analysis, used in many fields, including pattern recognition, image analysis, …

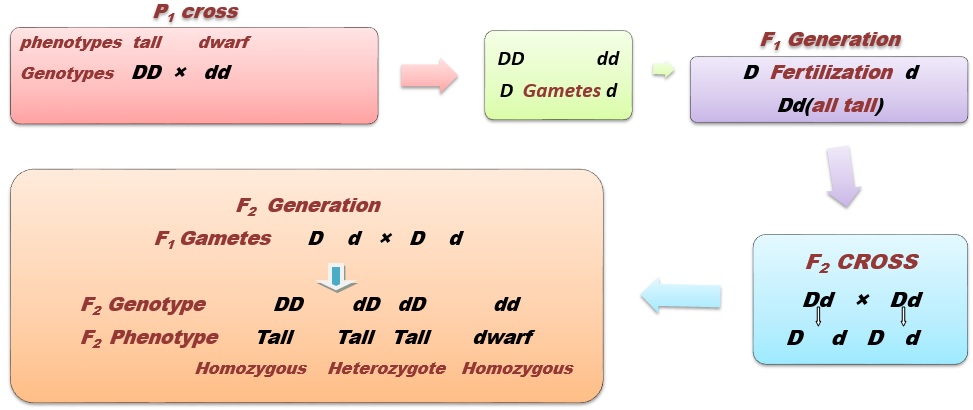

Clustering categorical data is an integral part of data mining and has attracted much attention recently. In this paper, we present k-ANMI, a new efficient algorithm for clustering categorical data. The k-ANMI algorithm works in a way similar to the popular k-means algorithm, and the goodness of the clustering at each step is evaluated using a mutual-information-based criterion (namely …).
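A k-means-style local search driven by a mutual-information criterion can be sketched as follows. The sketch assumes, as the algorithm's name suggests, that the criterion averages the NMI between the candidate clustering and the partition induced by each categorical attribute. Objects are moved one at a time to whichever cluster raises that average. The initialization, the brute-force recomputation of the score, and all function names are hypothetical simplifications, not the published k-ANMI procedure.

```python
from collections import Counter
from math import log, sqrt

def nmi(a, b):
    """NMI with geometric-mean normalization; 0 if either side has one cluster."""
    n = len(a)
    ca, cb, joint = Counter(a), Counter(b), Counter(zip(a, b))
    mi = sum((k / n) * log(n * k / (ca[i] * cb[j])) for (i, j), k in joint.items())
    ha = -sum((c / n) * log(c / n) for c in ca.values())
    hb = -sum((c / n) * log(c / n) for c in cb.values())
    return mi / sqrt(ha * hb) if ha > 0 and hb > 0 else 0.0

def anmi(labels, columns):
    """Average NMI between a clustering and each attribute's partition (assumed criterion)."""
    return sum(nmi(labels, col) for col in columns) / len(columns)

def k_anmi_sketch(rows, k, max_passes=10):
    """Greedy single-object moves that increase the average NMI."""
    columns = [list(col) for col in zip(*rows)]  # one partition per attribute
    labels = [i % k for i in range(len(rows))]   # arbitrary initial assignment
    best = anmi(labels, columns)
    for _ in range(max_passes):
        improved = False
        for idx in range(len(rows)):
            for cand in range(k):
                if cand == labels[idx]:
                    continue
                old = labels[idx]
                labels[idx] = cand
                score = anmi(labels, columns)
                if score > best + 1e-12:
                    best, improved = score, True  # keep the move
                else:
                    labels[idx] = old             # undo the move
        if not improved:
            break
    return labels, best

rows = [("red", "small")] * 3 + [("blue", "large")] * 3
labels, score = k_anmi_sketch(rows, k=2)
print(labels, round(score, 3))
```

Recomputing the full score for every candidate move costs far more than necessary. A practical implementation would update the contingency counts incrementally when one object moves.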


An encouraging result was first obtained on simulations: hierarchical clustering based on the log Bayes factor outperformed off-the-shelf clustering techniques, as well as raw and normalized mutual information, in terms of classification accuracy. On a toy example, we found that the Bayesian approaches led to results similar to those of mutual-information clustering techniques, with …

Because a large number of irrelevant and redundant features can reduce classification performance on massive data sets, we propose a method for automatic feature selection based on mutual information and a fuzzy clustering algorithm. The method proceeds as follows: first, the correlation between features is computed using mutual information, and the data are grouped.
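The first step, measuring feature correlation with mutual information, can be sketched for discrete features as a symmetric matrix of pairwise MI values. The fuzzy clustering that would group redundant features afterwards is not shown. The function names and the three toy feature columns are hypothetical.

```python
from collections import Counter
from math import log

def mutual_information(x, y):
    """Plug-in mutual information (nats) between two discrete feature columns."""
    n = len(x)
    cx, cy, cxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum((k / n) * log(n * k / (cx[a] * cy[b])) for (a, b), k in cxy.items())

def mi_matrix(columns):
    """Symmetric matrix of pairwise MI between feature columns."""
    m = len(columns)
    mat = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(i, m):
            mat[i][j] = mat[j][i] = mutual_information(columns[i], columns[j])
    return mat

features = {
    "f0": [0, 0, 1, 1, 0, 1, 0, 1],
    "f1": [0, 0, 1, 1, 0, 1, 0, 1],  # exact copy of f0, i.e. redundant
    "f2": [0, 1, 0, 1, 0, 1, 1, 0],  # statistically independent of f0
}
mat = mi_matrix(list(features.values()))
for name, row in zip(features, mat):
    print(name, [round(v, 3) for v in row])
```

The diagonal holds each feature's entropy. High off-diagonal values, such as those between f0 and f1, flag redundant pairs that a subsequent clustering step would place in the same group.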

Fuzzy clustering ensemble based on mutual information.

Key-Words: Clustering Ensemble, Mutual Information, soft partitions

1 Introduction

Clustering is the problem of partitioning a finite set of points in a multi-dimensional space into classes (called clusters) so that (i) the points belonging to the same class are similar and (ii) the points belonging to different classes are dissimilar.
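To compare soft partitions with mutual information, a joint distribution over pairs of clusters is needed. A common construction, used below as an assumption because the paper's own definition is not shown, sets P(i, j) = (1/n) Σ_x u_i(x) v_j(x), where u and v are membership matrices whose columns sum to 1. The function name and the toy matrices are hypothetical.

```python
import numpy as np

def soft_mutual_information(u, v):
    """Mutual information (nats) between two soft partitions.

    u: (k1, n) and v: (k2, n) membership matrices whose columns sum to 1.
    Assumes the joint P(i, j) = (1/n) * sum_x u[i, x] * v[j, x].
    """
    n = u.shape[1]
    p_ij = (u @ v.T) / n                       # joint over (cluster i, cluster j)
    p_i = p_ij.sum(axis=1, keepdims=True)      # (k1, 1) marginal
    p_j = p_ij.sum(axis=0, keepdims=True)      # (1, k2) marginal
    mask = p_ij > 0                            # skip empty cells (0 * log 0 = 0)
    return float(np.sum(p_ij[mask] * np.log(p_ij[mask] / (p_i @ p_j)[mask])))

hard = np.array([[1, 1, 0, 0], [0, 0, 1, 1]], dtype=float)  # crisp two-cluster partition
fuzzy = np.full((2, 4), 0.5)                                 # maximally uncertain memberships
print(soft_mutual_information(hard, hard))   # ≈ 0.693, i.e. log 2
print(soft_mutual_information(hard, fuzzy))  # 0.0: uniform memberships carry no information
```

For crisp (one-hot) memberships this construction reduces to the ordinary contingency-table mutual information, so it extends the hard-partition case rather than replacing it.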

Keywords: Dependence-maximization clustering, Squared-loss mutual information, Least-squares mutual information, Model selection, Structured data, Kernel

1 Introduction

Given a set of observations, the goal of clustering is to separate them into disjoint clusters so that observations in the same cluster are qualitatively similar to each other. K-means …
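Squared-loss mutual information (SMI) replaces the KL divergence in ordinary mutual information with the Pearson divergence: SMI = ½ Σ_{a,b} p(a) p(b) (p(a, b) / (p(a) p(b)) − 1)². The least-squares estimator (LSMI) named in the keywords fits a kernel model of the density ratio p(a, b) / (p(a) p(b)) instead of counting. The sketch below is only a plug-in version for two discrete label vectors, intended to show what the quantity measures. The function name is hypothetical.

```python
from collections import Counter

def squared_loss_mi(x, y):
    """Plug-in squared-loss mutual information for two discrete variables.

    SMI = 1/2 * sum_{a,b} p(a) p(b) * (p(a,b) / (p(a) p(b)) - 1)^2
    """
    n = len(x)
    px = {a: c / n for a, c in Counter(x).items()}
    py = {b: c / n for b, c in Counter(y).items()}
    pxy = Counter(zip(x, y))
    total = 0.0
    for a, pa in px.items():
        for b, pb in py.items():  # include empty cells: they contribute pa * pb
            ratio = (pxy[(a, b)] / n) / (pa * pb)  # density ratio p(a,b) / (p(a)p(b))
            total += pa * pb * (ratio - 1.0) ** 2
    return 0.5 * total

print(squared_loss_mi([0, 0, 1, 1], [0, 0, 1, 1]))  # 0.5: fully dependent
print(squared_loss_mi([0, 0, 1, 1], [0, 1, 0, 1]))  # 0.0: independent
```

Like ordinary mutual information, SMI is zero exactly when the joint distribution factorizes. A dependence-maximization clusterer chooses cluster labels that make this quantity large with respect to the data.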

In this chapter, we discuss the hierarchical clustering algorithm, the k-means algorithm (the most common relocation method), and a relatively new method, the two-step clustering algorithm. For hierarchical clustering, the first step is to choose a statistic that quantifies how far apart (or how similar) two cases are. A summary of these similarity measures can be found in …
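The hierarchical procedure described above can be sketched with SciPy: choose a dissimilarity statistic, build the merge tree, and then cut the tree. Euclidean distance, average linkage, the two-cluster cut, and the six toy points are illustrative choices only.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import pdist

points = np.array([
    [0.0, 0.0], [0.2, 0.1], [0.1, 0.3],  # one tight group
    [5.0, 5.0], [5.2, 4.9], [4.9, 5.3],  # another tight group
])

# Step 1: choose a statistic that quantifies how far apart two cases are.
distances = pdist(points, metric="euclidean")

# Step 2: repeatedly merge the closest clusters (average linkage).
tree = linkage(distances, method="average")

# Step 3: cut the tree to obtain a fixed number of clusters.
labels = fcluster(tree, t=2, criterion="maxclust")
print(labels)
```

Changing the `metric` passed to `pdist` (for example to `"cityblock"`) or the linkage `method` corresponds to choosing a different similarity measure or merge rule in the first two steps.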

Clustering evaluation. This time I did clustering with DBSCAN and HDBSCAN. These are fairly simple yet effective density-based clustering algorithms that work well on a wide range of data. Additionally, I've implemented a method that evaluates the clustering results with a variety of metrics: Adjusted Mutual Information Score, Adjusted Rand Index, Completeness, Homogeneity, Silhouette Coefficient, and V-measure.
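The post's own code is not shown. The following is a minimal sketch of such an evaluation using scikit-learn's `DBSCAN` and its `metrics` module, on synthetic blobs with known labels. The blob centers, `eps`, and `min_samples` are arbitrary assumptions, and HDBSCAN is left out.

```python
from sklearn import metrics
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_blobs

X, y_true = make_blobs(n_samples=300, centers=[[0, 0], [5, 5], [-5, 5]],
                       cluster_std=0.3, random_state=0)
labels = DBSCAN(eps=0.5, min_samples=5).fit_predict(X)  # noise points get label -1

scores = {
    # External metrics: compare predicted labels with the known ground truth.
    "adjusted_mutual_info": metrics.adjusted_mutual_info_score(y_true, labels),
    "adjusted_rand": metrics.adjusted_rand_score(y_true, labels),
    "completeness": metrics.completeness_score(y_true, labels),
    "homogeneity": metrics.homogeneity_score(y_true, labels),
    "v_measure": metrics.v_measure_score(y_true, labels),
    # Internal metric: uses only the data and the predicted labels.
    "silhouette": metrics.silhouette_score(X, labels),
}
for name, value in scores.items():
    print(f"{name:>22}: {value:.3f}")
```

Two caveats apply to this setup. The external metrics require ground-truth labels, which real data often lack. In addition, the noise label -1 is treated as just another cluster by every metric here, so a run with many noise points can look better or worse than it really is.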